Converting images to data

Marvin can use OpenAI's vision API to process images and convert them into structured data, transforming unstructured information into native types that are appropriate for a variety of programmatic use cases.

What it does

The cast function can cast images to structured types.

How it works

Casting an image is a two-step process. First, a caption is generated for the image, written so that it is aligned with the structuring goal. Next, an LLM performs the actual cast operation, converting that caption into the target type.
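The two steps can be pictured with a minimal sketch. This is not Marvin's internal code: `cast_image`, `caption_fn`, and `cast_fn` are hypothetical names, and the stub functions stand in for the vision model and the LLM so the example runs offline.

```python
from typing import Any, Callable


def cast_image(
    image: bytes,
    target: type,
    caption_fn: Callable[[bytes, str], str],
    cast_fn: Callable[[str, type], Any],
) -> Any:
    """Hypothetical two-step pipeline: caption the image, then cast the caption."""
    # Step 1: ask for a caption that focuses on what the target type needs.
    goal = f"Describe the details needed to produce a value of type {target.__name__}."
    caption = caption_fn(image, goal)
    # Step 2: convert the caption text into the target type.
    return cast_fn(caption, target)


# Stubs standing in for the vision model and the LLM (assumptions for illustration).
def stub_caption(image: bytes, goal: str) -> str:
    return "A basket holding 3 apples"


def stub_cast(caption: str, target: type) -> Any:
    first_number = next(token for token in caption.split() if token.isdigit())
    return target(first_number)


result = cast_image(b"", int, stub_caption, stub_cast)
```

Passing the target into the captioning step is what keeps the caption aligned with the structuring goal, so the second step receives only the details it needs.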

Example: locations

We will cast this image to a Location type:

Example: getting information about a book

We will cast this image to a Book to extract key information:

Instructions

If the target type isn't self-documenting, or you want to give additional guidance, you can pass natural language instructions when calling cast to steer the output.

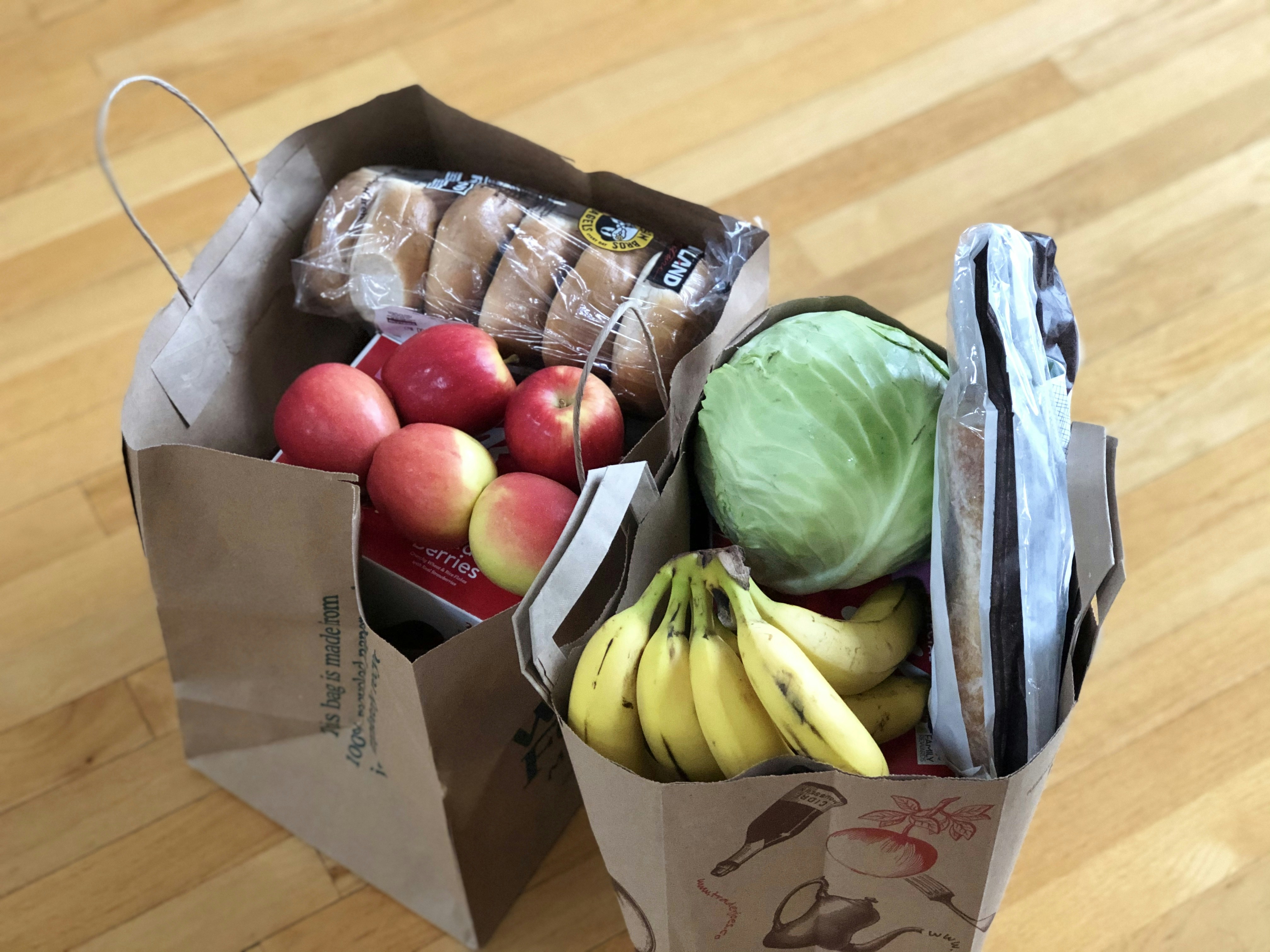

Example: checking groceries

Let's use this image to see if we got everything on our shopping list:
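A minimal sketch of how such a check could look. It assumes that `marvin.Image` accepts an image URL and that `cast` takes `target` and `instructions` keyword arguments; neither is shown in the surviving text of this page. The helper names and the wording of the instructions are illustrative only.

```python
def build_instructions(shopping_list: list[str]) -> str:
    """Turn the shopping list into natural language guidance for the cast."""
    return f"Did I forget anything on my list: {shopping_list}?"


def find_missing_items(image_url: str, shopping_list: list[str]) -> list[str]:
    """Ask Marvin which list items do not appear in the image (hypothetical helper)."""
    # Imported here so the pure helper above can be used without Marvin installed.
    import marvin

    # Assumption: marvin.Image wraps an image URL for use as cast input.
    groceries = marvin.Image(image_url)
    return marvin.cast(
        groceries,
        target=list[str],
        instructions=build_instructions(shopping_list),
    )
```

Calling `find_missing_items` requires Marvin to be installed and an API key to be configured. The target `list[str]` says nothing about what the strings should mean, so the instructions carry the actual question.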

Model parameters

You can pass parameters to the underlying API via the model_kwargs argument of cast. These parameters are passed directly to the API, so you can use any supported parameter.
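As a rough illustration, the sketch below forwards a `temperature` setting. The choice of parameter is an assumption; whether a given parameter is accepted depends on the underlying API and model, and `count_items` is a hypothetical helper.

```python
# Assumed example parameter; substitute any parameter the underlying API supports.
MODEL_KWARGS = {"temperature": 0.0}


def count_items(text: str) -> int:
    """Cast a sentence to an integer, forwarding MODEL_KWARGS to the API."""
    # Imported here so this module can be loaded without Marvin installed.
    import marvin

    return marvin.cast(text, target=int, model_kwargs=MODEL_KWARGS)
```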

Async support

If you are using Marvin in an async environment, you can use cast_async:
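A minimal sketch, assuming `cast_async` takes the same arguments as `cast` (the input followed by the target type, as in the mapping example on this page). The wrapper name `word_to_int` is hypothetical.

```python
import asyncio


async def word_to_int(text: str) -> int:
    """Await Marvin's async cast from inside a coroutine."""
    # Imported here so this module can be loaded without Marvin installed.
    import marvin

    return await marvin.cast_async(text, int)


# Example call (needs Marvin installed and an API key configured):
# asyncio.run(word_to_int("one"))
```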

Mapping

To transform a list of inputs at once, use .map:

    import marvin

    inputs = [
        "I bought two donuts.",
        "I bought six hot dogs.",
    ]
    # Cast every input to an int; results come back in input order.
    result = marvin.cast.map(inputs, int)
    assert result == [2, 6]

(marvin.cast_async.map is also available for async environments.)

Mapping automatically issues parallel requests to the API, making it a highly efficient way to work with multiple inputs at once. The result is a list of outputs in the same order as the inputs.
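The ordering guarantee can be illustrated without calling any API. The sketch below is not Marvin's implementation: `fake_cast` is a hypothetical stand-in for one request, and `asyncio.gather` is used here only to show how concurrent work can still return results in input order, even when the first request finishes last.

```python
import asyncio

# Hypothetical lookup used by the stand-in below; no network call is made.
_WORDS = {"two": 2, "six": 6}


async def fake_cast(text: str, delay: float) -> int:
    """Stand-in for a single API request that takes `delay` seconds."""
    await asyncio.sleep(delay)
    return next(number for word, number in _WORDS.items() if word in text)


async def map_in_order(inputs: list[str]) -> list[int]:
    # Earlier inputs get longer delays, so they complete last.
    delays = [0.02 * (len(inputs) - i) for i in range(len(inputs))]
    tasks = [fake_cast(text, delay) for text, delay in zip(inputs, delays)]
    # gather runs the tasks concurrently and returns results in argument order.
    return await asyncio.gather(*tasks)


results = asyncio.run(
    map_in_order(["I bought two donuts.", "I bought six hot dogs."])
)
```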